NLP From Scratch: Translation with a Sequence to Sequence Network and Attention — PyTorch Tutorials 2.0.1+cu117 documentation

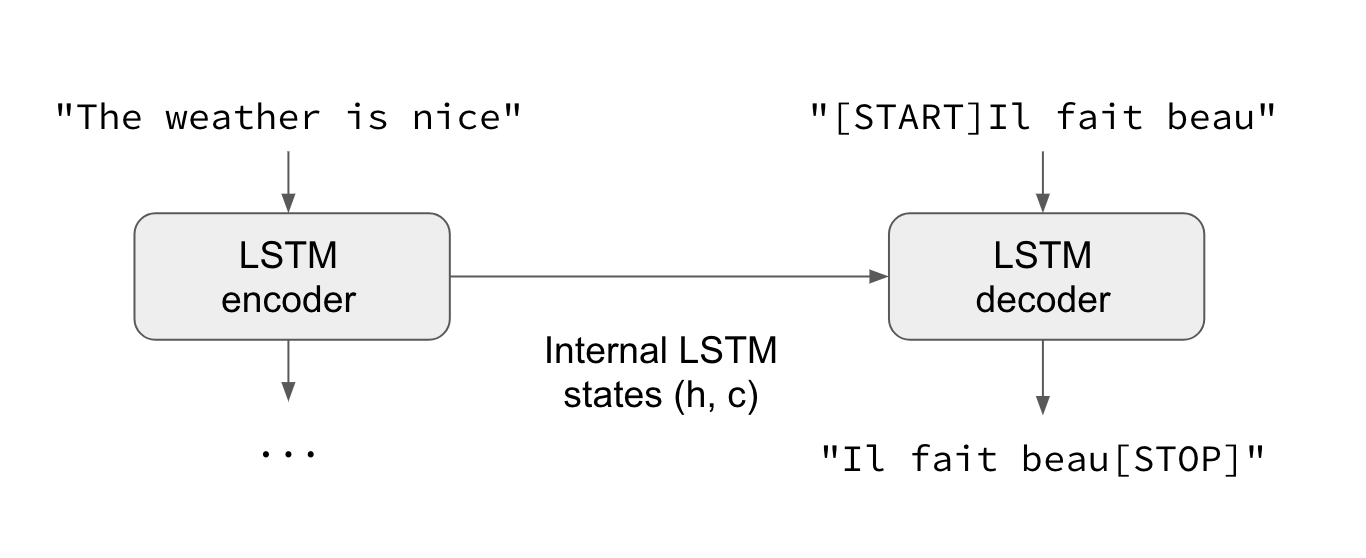

Sequence-to-sequence RNN architecture for machine translation (adapted...

Understanding Encoder-Decoder Sequence to Sequence Model | by Simeon Kostadinov | Towards Data Science

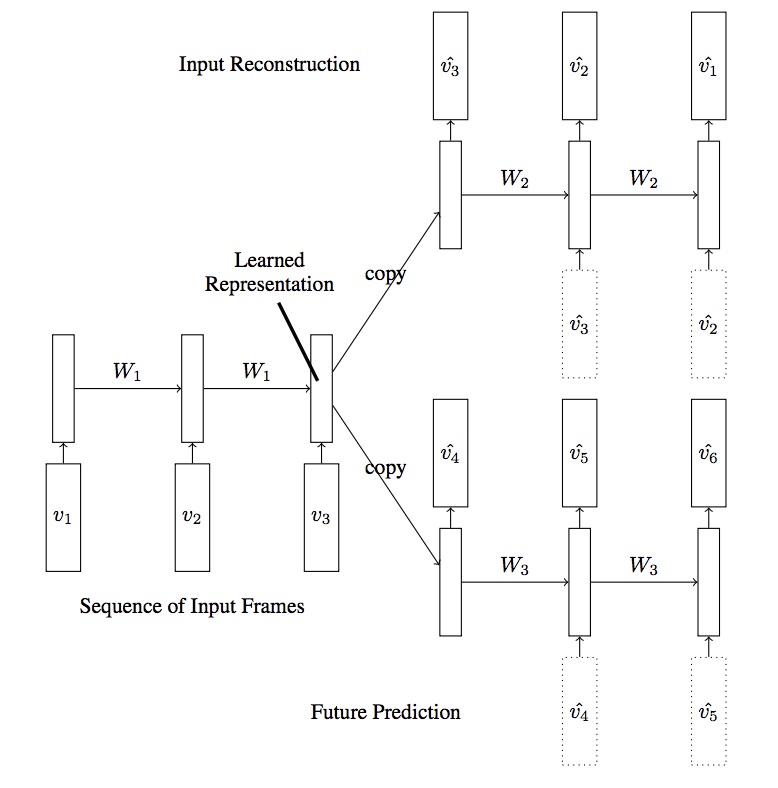

Sequence-to-sequence Autoencoder (SA) consists of two RNNs: RNN Encoder...

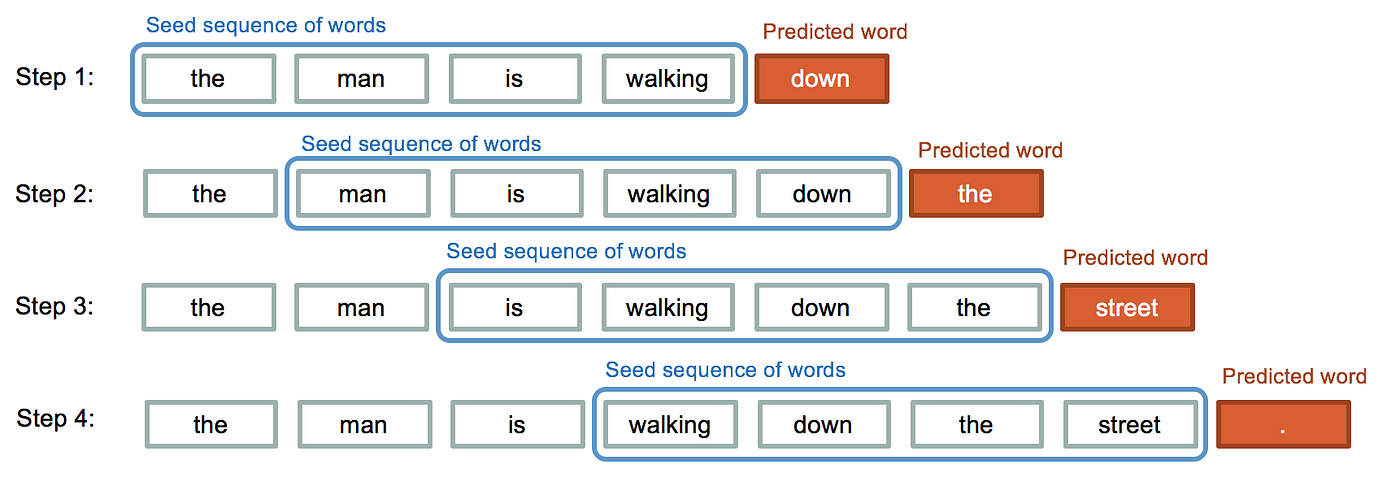

machine learning - How is batching normally performed for sequence data for an RNN/LSTM - Stack Overflow

When Recurrent Models Don't Need to be Recurrent – The Berkeley Artificial Intelligence Research Blog

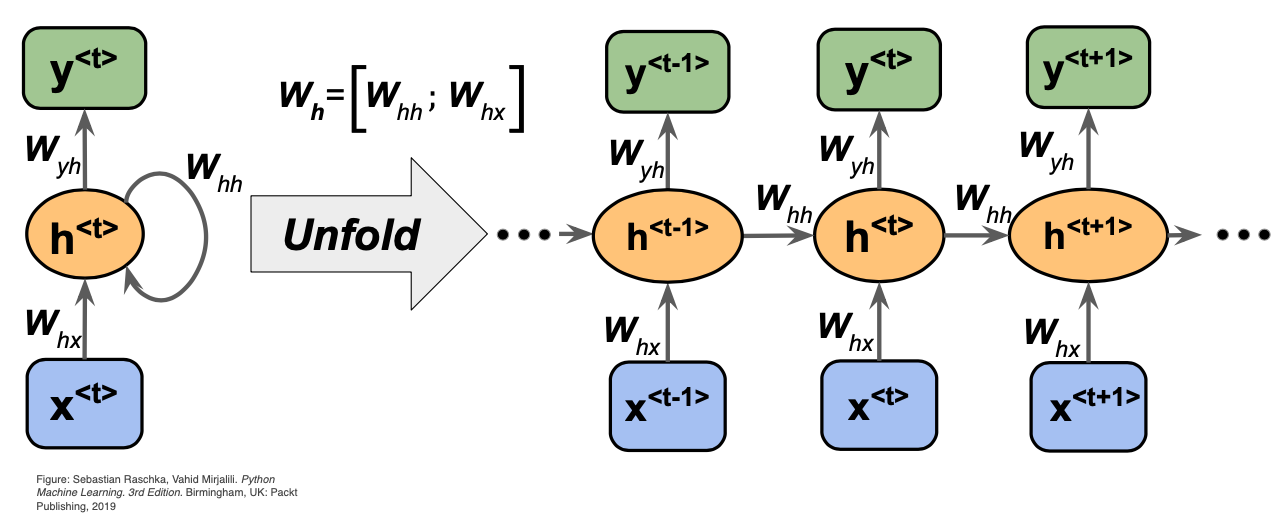

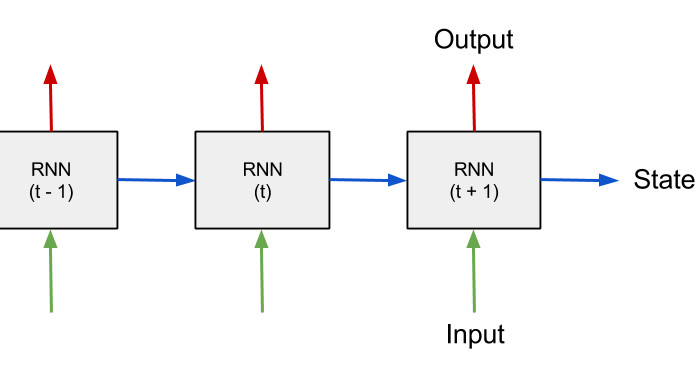

![4. Recurrent Neural Networks - Neural networks and deep learning [Book]](https://www.oreilly.com/api/v2/epubs/9781492037354/files/assets/mlst_1404.png)