Simple working example how to use packing for variable-length sequence inputs for rnn - PyTorch Forums

Minimal tutorial on packing (pack_padded_sequence) and unpacking (pad_packed_sequence) sequences in pytorch. · GitHub

GitHub - HarshTrivedi/packing-unpacking-pytorch-minimal-tutorial: Minimal tutorial on packing and unpacking sequences in pytorch

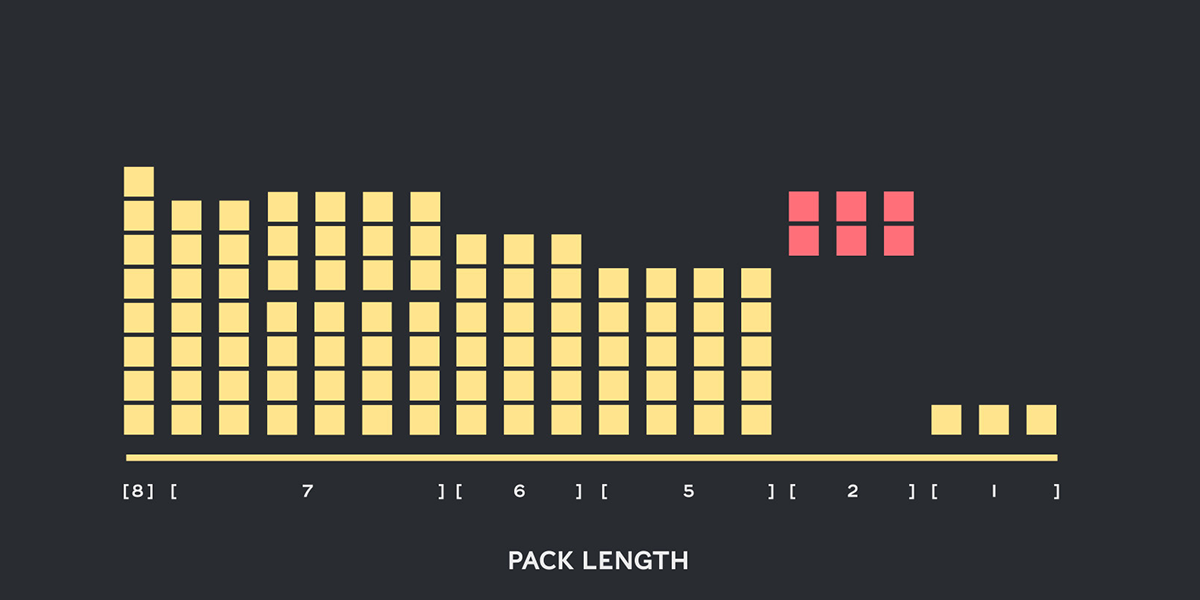

RNN Language Modelling with PyTorch — Packed Batching and Tied Weights | by Florijan Stamenković | Medium

pytorch-seq2seq/4 - Packed Padded Sequences, Masking, Inference and BLEU.ipynb at master · bentrevett/pytorch-seq2seq · GitHub

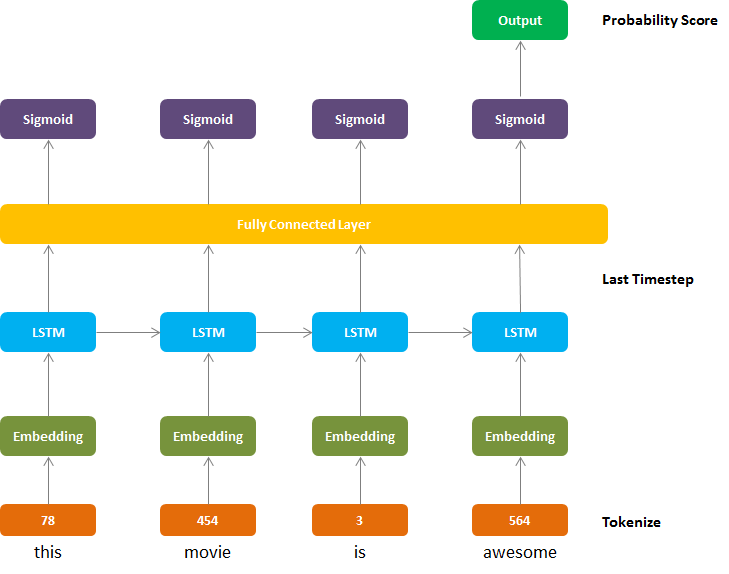

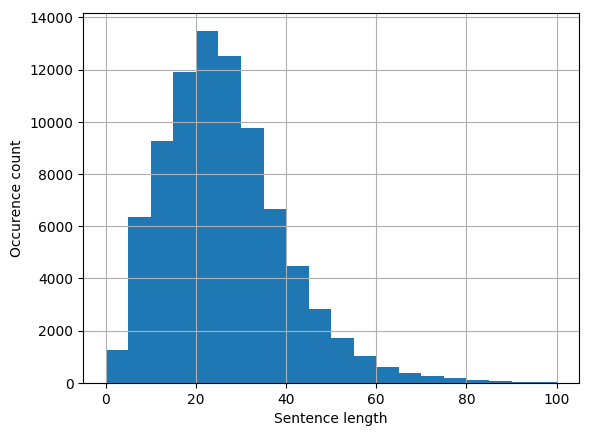

Introducing Packed BERT for 2x Training Speed-up in Natural Language Processing | by Dr. Mario Michael Krell | Towards Data Science

Do we need to set a fixed input sentence length when we use padding-packing with RNN? - nlp - PyTorch Forums

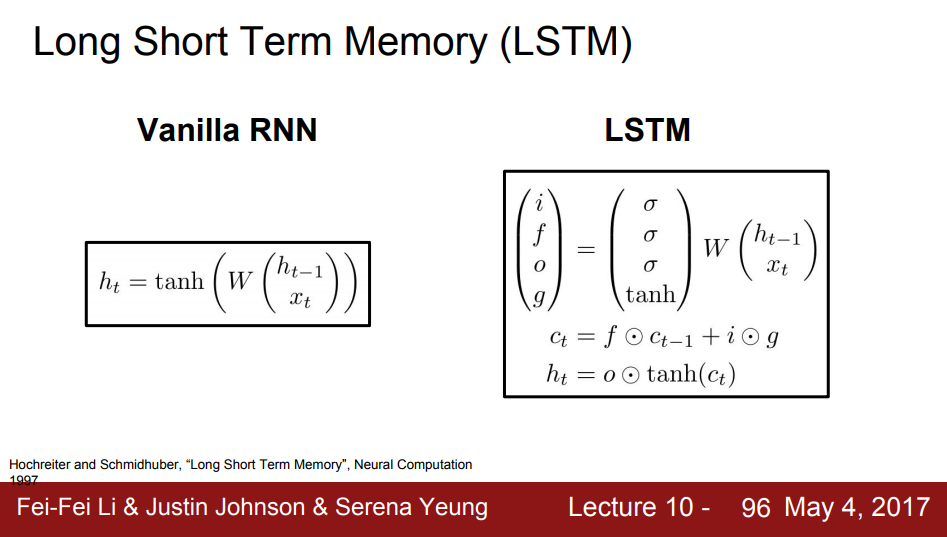

machine learning - How is batching normally performed for sequence data for an RNN/LSTM - Stack Overflow
